MBTiles and OpenLayers

I was testing the feasibility of adding an overlay to an OpenLayers map that is displayed on a mobile/tablet device.

The overlay is going to be in the MBTiles format made popular by MapBox.

The MBTiles database will be accessed locally on the device, which is useful when bandwidth is poor or on non-3G tablets.

The MBTiles format is described here: http://www.mapbox.com/developers/mbtiles/

It is basically a SQLite database that holds a collection of x, y, z indexed tiles.
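To make that layout concrete, here is a minimal sketch of how a request for one tile maps onto a query against the database. The column names match the tiles table queried later in this post; the helper name buildTileQuery and the example coordinates are hypothetical and only there for illustration.

```javascript
// Hypothetical helper: builds the SQL and parameters for looking up one tile.
// Column names follow the tiles table queried later in this post.
function buildTileQuery(z, x, y) {
    return {
        sql: "SELECT tile_data FROM tiles " +
             "WHERE zoom_level=? AND tile_column=? AND tile_row=?",
        params: [z, x, y]
    };
}

// Example coordinates are made up.
var q = buildTileQuery(12, 2005, 1361);
console.log(q.sql, q.params);
```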

WebKit-based browsers, including mobile versions, can query SQLite databases through Web SQL, although it is not actually part of the HTML5 spec.

The main issue with using MBTiles locally is actually getting the database into the right location.

Another is the speed at which the device can render the images, because of the overhead of converting the blob images into base64-encoded images.

There are, however, a couple of ways of getting the database onto the device.

Getting MBTiles onto the Device/Browser

With PhoneGap

You can use the FileTransfer object in PhoneGap to copy the database locally from a server. On the iPhone it will be downloaded to the Documents folder by default.

http://docs.phonegap.com/en/2.7.0/cordova_file_file.md.html

Example code to download an MBTiles database:

    // Log the error code from any failed file or transfer operation.
    var fail = function (error) {
        console.log(error.code);
    };

    // Only download the database the first time the app runs.
    var doOnce = window.localStorage.getItem("doOnce");
    if (!doOnce) {
        window.requestFileSystem(LocalFileSystem.PERSISTENT, 0, function (fileSystem) {
            fileSystem.root.getFile('testDB2.db', {create: true, exclusive: false}, function (fileEntry) {
                var localPath = fileEntry.fullPath;
                // On Android, strip the file:// prefix from the path.
                if (device.platform === "Android" && localPath.indexOf("file://") === 0) {
                    localPath = localPath.substring(7);
                }
                console.log("LOCAL PATH  " + localPath);
                var ft = new FileTransfer();
                ft.download('http://dlib-tahay.ucs.ed.ac.uk/nls2.mbtiles',
                    localPath, function (entry) {
                        // Set the flag only on success so a failed download is retried on the next launch.
                        window.localStorage.setItem("doOnce", 'true');
                        console.log("successful download");
                    }, fail);
            }, fail);
        }, fail);
    }

Then use the PhoneGap SQLite plugin https://github.com/pgsqlite/PG-SQLitePlugin-iOS.git and open the database like this:

window.sqlitePlugin.openDatabase("'testDB2");

The benefit of using a PhoneGap SQLite plugin is that it gives you flexibility over where you download the MBTiles database to, and it removes the device-dependent limits on database size.

Also, if a browser drops native Web SQL support, it doesn't matter.
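The choice between the plugin and native Web SQL can be made at runtime. The following is a minimal sketch, not code from the app: the helper name openTileDatabase is hypothetical, and the env parameter stands in for the browser's window object so the logic can be tried outside a device. The Web SQL arguments mirror the openDatabase call shown later in this post.

```javascript
// Hypothetical helper: prefer the PhoneGap SQLite plugin, fall back to Web SQL.
// `env` stands in for `window` so the logic can be exercised anywhere.
function openTileDatabase(env, name) {
    if (env.sqlitePlugin && typeof env.sqlitePlugin.openDatabase === 'function') {
        return env.sqlitePlugin.openDatabase(name);
    }
    if (typeof env.openDatabase === 'function') {
        // Web SQL also needs a version, a display name and an estimated size.
        return env.openDatabase(name, "1.0", "Cached Tiles", 50 * 1024 * 1024);
    }
    return null; // no SQLite access available
}
```

In the app this would be called with window and the database name used above, and a null result would mean falling back to remote tiles only.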

Alternatively, rather than download a remote database, you could copy over a local database at startup.

A simple way to add a prepopulated SQLite DB in PhoneGap is described in this blog post:

http://hansjar.blogspot.co.uk/2013/04/how-to-easily-add-prepopulated-sqlite.html

If you want to keep it an entirely non-native, web-app-based solution, or to target a desktop browser (WebKit-based: Chrome, Safari), you might be able to use a tool like:

https://github.com/orbitaloop/WebSqlSync

There are more suggestions on Stack Overflow here, but I have not tried them.

http://stackoverflow.com/questions/1744522/best-way-to-synchronize-local-html5-db-websql-storage-sqlite-with-a-server-2?answertab=active#tab-top

Syncing by creating an empty local MBTiles database and then populating it with inserts of data from the server is likely to adversely affect performance. I have not tried this, so I don't know how well it would work.

OpenLayers integration

The first thing is to subclass the OpenLayers TMS layer class.

    /**
     * Map with local storage caching.
     * @params options:
     *     serviceVersion - TMS service version
     *     layerName      - TMS layer name
     *     type           - layer type
     *     isBaseLayer    - is this the base layer?
     *     name           - map name
     *     url            - TMS URL
     *     opacity        - overlay transparency
     */
    var MapWithLocalStorage = OpenLayers.Class(OpenLayers.Layer.TMS, {
        initialize: function(options) {
            this.serviceVersion = options.serviceVersion;
            this.layername = options.layerName;
            this.type = options.type;
            this.async = true;
            this.isBaseLayer = options.isBaseLayer;
            if (options.opacity) {
                this.opacity = options.opacity;
            }
            OpenLayers.Layer.TMS.prototype.initialize.apply(this, [options.name,
                options.url,
                {}]
            );
        },
        getURLasync: function(bounds, callback, scope) {
            var urlData = this.getUrlWithXYZ(bounds);
            webdb.getCachedTilePath(callback, scope, urlData.x, urlData.y, urlData.z, urlData.url);
        },
        getUrlWithXYZ: function(bounds) {
            bounds = this.adjustBounds(bounds);
            var res = this.map.getResolution();
            var x = Math.round((bounds.left - this.tileOrigin.lon) / (res * this.tileSize.w));
            var y = Math.round((bounds.bottom - this.tileOrigin.lat) / (res * this.tileSize.h));
            var z = this.serverResolutions != null ?
                OpenLayers.Util.indexOf(this.serverResolutions, res) :
                this.map.getZoom() + this.zoomOffset;
            // invert y for OpenStreetMap-style tile numbering rather than TMS-style
            var ymax = 1 << z;
            var y = ymax - y - 1;
            var path = this.serviceVersion + "/" + this.layername + "/" + z + "/" + x + "/" + y + "." + this.type;
            var url = this.url;
            if (OpenLayers.Util.isArray(url)) {
                url = this.selectUrl(path, url);
            }
            return { url: url + path, x: x, y: y, z: z };
        },
        getURL: function(bounds) {
            return OpenLayers.Layer.XYZ.prototype.getURL.apply(this, [bounds]);
        }
    });

Notes

    this.async = true;

is set because the layer has to receive its images from the local SQLite database asynchronously, as Web SQL has an asynchronous, callback-style API.

    var ymax = 1 << z;

    var y = ymax - y - 1;

All this does is invert the y-axis tile index to handle OpenStreetMap-style numbering (row 0 at the top); it is not required for tiles numbered TMS-style (row 0 at the bottom).

This is a good site that describes the various tile numbering schemes around:

http://www.maptiler.org/google-maps-coordinates-tile-bounds-projection/
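The arithmetic in getUrlWithXYZ can be tried outside OpenLayers. The sketch below is only an illustration under stated assumptions: the function name tileIndexFromBounds is hypothetical, and the origin, tile size and zoom 0 resolution are typical spherical Mercator values that I am assuming here, not values read from the layer.

```javascript
// Sketch of the tile index arithmetic, outside OpenLayers.
// The three constants are assumed spherical Mercator values.
var ORIGIN = -20037508.34;          // assumed tile origin (bottom-left corner), in metres
var TILE_SIZE = 256;                // assumed tile width and height, in pixels
var MAX_RESOLUTION = 156543.0339;   // assumed resolution at zoom 0, metres per pixel

// left/bottom: bottom-left corner of the tile's bounds, in map units.
function tileIndexFromBounds(left, bottom, zoom) {
    var res = MAX_RESOLUTION / Math.pow(2, zoom);
    var x = Math.round((left - ORIGIN) / (res * TILE_SIZE));
    var yFromBottom = Math.round((bottom - ORIGIN) / (res * TILE_SIZE));
    // Invert so that row 0 is at the top instead of the bottom.
    var y = (1 << zoom) - yFromBottom - 1;
    return { x: x, y: y, z: zoom };
}

// The tile whose bottom-left corner is at (0, 0) at zoom 1 is the north-east quadrant.
var t = tileIndexFromBounds(0, 0, 1);
console.log(t.x, t.y, t.z);
```

With these assumed constants the north-east quadrant at zoom 1 comes out as x = 1, y = 0, which is the top row once the y axis has been inverted.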

The Database Setup

"use strict";

    var webdb = {};

    // Returns the webdb helper with its database opened, or undefined if Web SQL is unavailable.
    function getWebDatabase() {
        if (typeof(openDatabase) !== 'undefined') {
            if (!webdb.db) {
                webdb.open();
            }
        }
        else {
            webdb = undefined;
        }
        return webdb;
    }

    webdb.open = function() {
        var dbSize = 50 * 1024 * 1024; // 50MB
        webdb.db = openDatabase("'testDB2", "1.0", "Cached Tiles", dbSize);
    };

    webdb.onError = function(tx, e) {
        console.warn("There has been an error: " + e.message);
    };

    webdb.onSuccess = function(tx, r) {
        console.log("Successful Database tx ");
    };

    webdb.createTablesIfRequired = function() {
        console.log("Creating DataBase Tables");
        var db = webdb.db;
        db.transaction(function(tx) {
            tx.executeSql("CREATE TABLE IF NOT EXISTS " +
                "tiles(zoom_level INTEGER, tile_column INTEGER, tile_row INTEGER, tile_data TEXT, mapName TEXT)", [], webdb.onSuccess,
                webdb.onError);
            tx.executeSql("CREATE UNIQUE INDEX  IF NOT EXISTS " +
                " tile_index on tiles(zoom_level, tile_column, tile_row, mapName)", [], webdb.onSuccess,
                webdb.onError);
        });
    };

    function hexToBase64(str) {
        var binary = '';
        // Convert two hex digits at a time; a trailing quote left over from quote() is ignored.
        // Build the string in a loop, as String.fromCharCode.apply(null, hexArray)
        // was giving a stack error on large images.
        for (var i = 0; i + 1 < str.length; i += 2) {
            binary += String.fromCharCode(parseInt(str.substring(i, i + 2), 16));
        }
        return window.btoa(binary);
    }

    webdb.getCachedTilePath = function(callback, scope, x, y, z, url) {
        var db = webdb.db;
        var resultsCallback = function(tx, rs) {
            console.log('resultsCallback *********************');
            console.log('rs.rows.length ' + rs.rows.length);
            if (callback) {
                if (rs.rows.length > 0) {
                    var rowOutput = rs.rows.item(0);
                    var tile_data = rowOutput['tile_data'];
                    // quote() returns X'...', so strip the X' prefix and the closing quote
                    tile_data = tile_data.substring(2, tile_data.length - 1);
                    callback.call(scope, "data:image/png;base64," + hexToBase64(tile_data));
                } else {
                    // tile not in the local database: fall back to the remote URL
                    callback.call(scope, url);
                }
            }
        };
        db.transaction(function(tx) {
            tx.executeSql("SELECT quote(tile_data) as tile_data FROM tiles where zoom_level=? AND tile_column=? AND tile_row=?", [z, x, y], resultsCallback,
                webdb.onError);
        });
    };

Notes

When you have larger blobs in the database, you can't pass the whole array to String.fromCharCode via apply, as I was getting stack memory issues on the device (iPhone).

So you have to loop through and build the string manually.

You have to use the quote function on the tile_data blob to turn it into a hex string:

    SELECT quote(tile_data) as tile_data

Then trim the X' prefix off the hex string before base64 encoding it.
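A possible middle ground between the per-byte loop and a single apply call, sketched here and not tested on a device, is to convert the hex string in fixed-size chunks so that each apply call only sees a small array. The helper name quotedBlobToDataUri and the chunk size are hypothetical choices, and the Buffer fallback is only there so the sketch can also run under Node, where btoa may be missing.

```javascript
// btoa exists in browsers; the Buffer branch only lets this sketch run under Node.
var toBase64 = (typeof btoa === 'function') ? btoa : function (binary) {
    return Buffer.from(binary, 'binary').toString('base64');
};

// Hypothetical helper: turns the output of SQLite's quote() on a blob,
// e.g. X'89504E47', into a PNG data URI.
function quotedBlobToDataUri(quoted) {
    var hex = quoted.slice(2, -1);   // drop the leading X' and the trailing '
    var CHUNK = 4096;                // bytes per fromCharCode call (arbitrary choice)
    var binary = '';
    for (var start = 0; start < hex.length; start += CHUNK * 2) {
        var end = Math.min(start + CHUNK * 2, hex.length);
        var codes = [];
        for (var i = start; i < end; i += 2) {
            codes.push(parseInt(hex.substring(i, i + 2), 16));
        }
        // apply() is only given one small chunk at a time.
        binary += String.fromCharCode.apply(null, codes);
    }
    return "data:image/png;base64," + toBase64(binary);
}

// 48656C6C6F is the ASCII text "Hello", used as stand-in bytes rather than a real PNG.
console.log(quotedBlobToDataUri("X'48656C6C6F'"));
```

Whether this is actually faster than the plain loop on an older phone would need measuring.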

Testing

If you just want to test the JavaScript/HTML5 side with MBTiles, you can copy your MBTiles database to the correct folder. For the iPhone Simulator on a Mac:

/Users/murrayking/Library/Application Support/iPhone Simulator/6.1/Applications/667F70EF-D002-425D-86C9-5027C965C518/Library/WebKit/LocalStorage/file__0/0000000000000001.db

or, for Chrome on a Mac:

/Users/murrayking/Library/Application Support/Google/Chrome/Default/databases/http_localhost_8080/13
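Once the file is in place, a quick sanity check is to count the tiles per zoom level. This is a minimal sketch, assuming a Web SQL handle such as webdb.db from the code above; the helper name countTilesByZoom and its callback shape are hypothetical.

```javascript
// Hypothetical helper: reports how many tiles each zoom level holds.
// `db` is a Web SQL database handle such as webdb.db from the code above.
function countTilesByZoom(db, done) {
    db.transaction(function (tx) {
        tx.executeSql(
            "SELECT zoom_level, COUNT(*) AS n FROM tiles GROUP BY zoom_level",
            [],
            function (tx, rs) {
                var counts = {};
                for (var i = 0; i < rs.rows.length; i++) {
                    var row = rs.rows.item(i);
                    counts[row.zoom_level] = row.n;
                }
                done(null, counts);
            },
            function (tx, e) {
                done(e);
            }
        );
    });
}
```

In the browser this could be called as countTilesByZoom(webdb.db, function (err, counts) { console.log(err || counts); }); an empty result suggests the file was copied to the wrong place.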

Overall

This approach is a bit convoluted, especially the conversion of the blob to base64, and performance is a bit poor on older devices. On newer devices it is acceptable, and as devices become more powerful it will become less of an issue, as with all HTML5/JavaScript-type things.

I have not tried it on Android yet, but it should work. It worked in the Chrome browser on a Linux box.

It does allow you to use the rich OpenLayers framework cross-platform without having to invest in native versions.

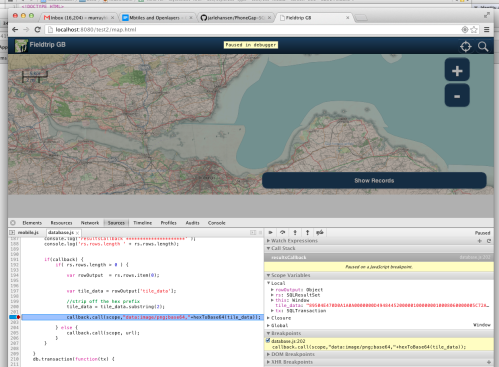

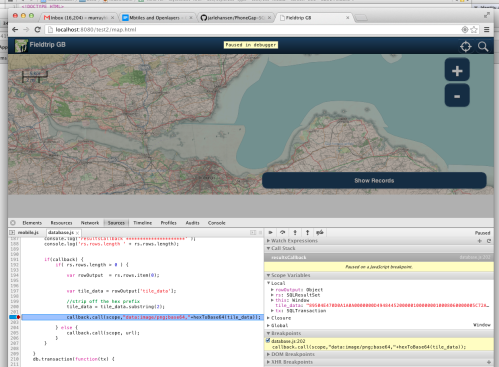

Also, you can debug and test using a desktop browser, which is fast, before doing proper testing on the actual device.

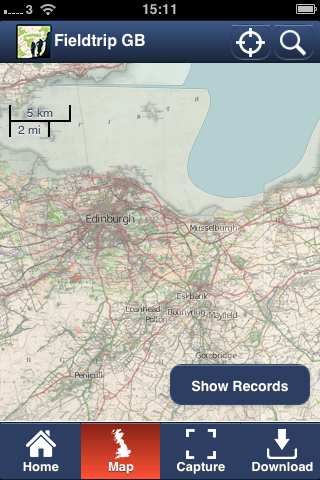

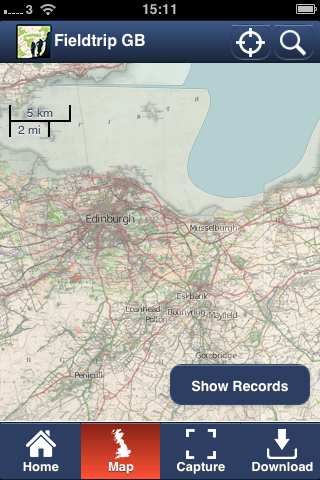

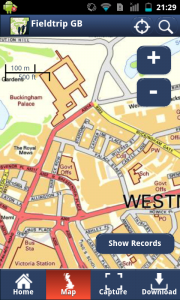

Example screenshot of it working on an iPhone 3G using PhoneGap and MBTiles.

This is a development version based on our Fieldtrip GB app (http://fieldtripgb.blogs.edina.ac.uk/), which is available on Android and iPhone.

The overlay is a historic map in MBTiles format from the National Library of Scotland.

Debugging in Chrome (non-native).
